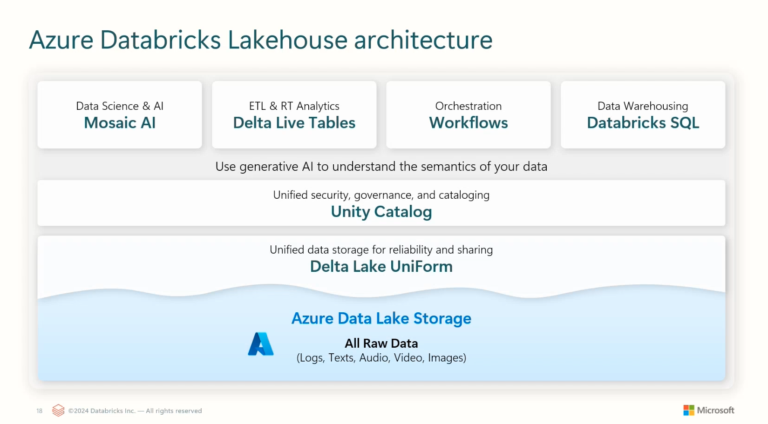

Data is being generated at an unprecedented rate, and traditional methods often struggle to keep up. This makes big data processing essential for businesses aiming to thrive. Databricks, a powerful Apache Spark-based analytics platform, simplifies the processing of massive datasets, enabling quick and efficient analysis, particularly for big data and machine learning tasks.

However, the real value of data lies in making it accessible and actionable. This is where Power BI comes in. As a leading business intelligence tool, Power BI is renowned for its interactive dashboards, rich visualizations, and user-friendly interface. It allows users to connect to various data sources, transform raw data into meaningful insights, and share them effortlessly.

By connecting Databricks to Power BI, you can unlock the full potential of your data, turning complex information into insightful visuals that drive better decision-making. In this article—and the accompanying video—I’ll guide you through the step-by-step process of integrating these two powerful tools, making data migration and integration seamless and efficient.

Setting up your cluster

To connect Power BI to Databricks, the first step is to set up a Databricks cluster. A cluster in Databricks is a set of computation resources that provides the processing power for your analytics. Here’s a quick guide on how to set up a cluster:

Step 1: Log into Databricks

First, log into your Databricks account. You can access Databricks from the location you’ve provisioned it or directly via the Databricks website if you have a standalone account.

Step 2: Navigate to the Clusters Page

Once logged in, go to the Clusters page. You can find this option in the left-hand navigation pane. This page shows all the clusters currently set up in your workspace.

Step 3: Create a New Cluster

Click the Create Cluster button at the top of the Clusters page. This will open a new cluster configuration form.

Step 4: Configure the Cluster

When setting up your Databricks cluster, start by giving it a descriptive name that clearly reflects its purpose. Next, choose the appropriate cluster mode—either “Standard” for most use cases or “High Concurrency” if needed.

After doing this, select the Databricks Runtime Version that best meets your requirements, taking into account the Apache Spark version and any additional libraries you might need. Consider enabling autoscaling, which automatically adjusts the number of worker nodes based on your workload.

Choose the appropriate instance type for both driver and worker nodes, depending on your performance and workload needs. Determine the minimum and maximum number of worker nodes your cluster should have.

Finally, explore the advanced options, where you can configure additional settings like custom tags, logging, and initialization scripts if necessary.

Step 5: Launch the Cluster

After configuring the cluster, click Create Cluster. Databricks will start the process of setting up your cluster, which may take a few minutes. Once the cluster is running, it’s ready for use.

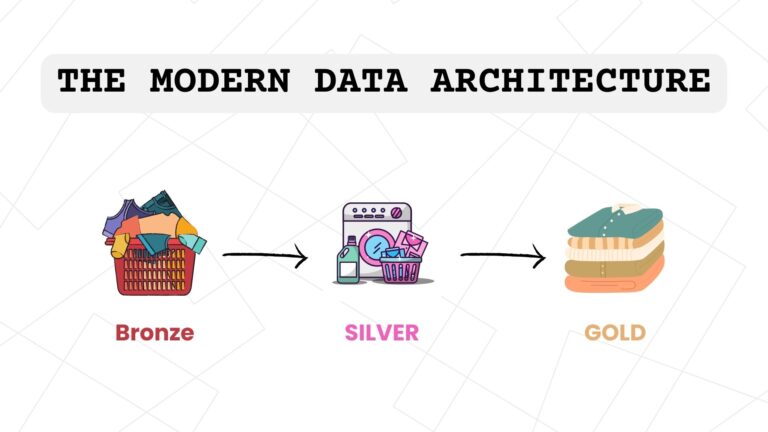

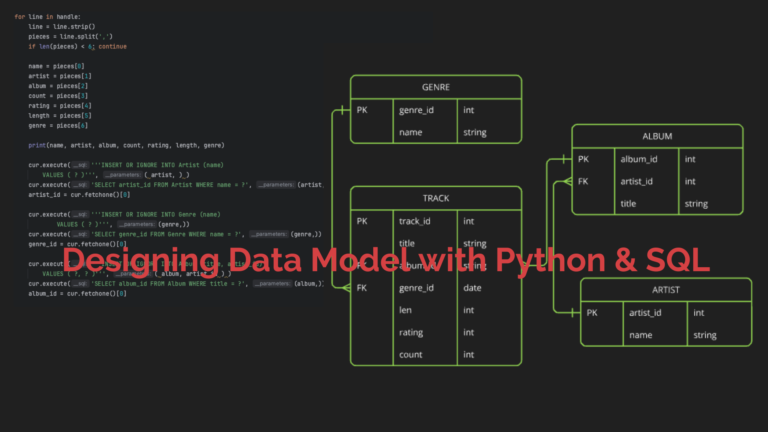

Before diving into the specifics, it’s crucial to understand the purpose of this step. Here, we are creating a notebook designed to load data into Hive tables. The primary goal of these scripts is to set up these tables, which we will later connect to Databricks.

Since the focus of this article isn’t on the script itself, I’ll briefly explain its purpose and the expected outcome. Essentially, the script helps prepare your data in Hive, which you’ll need when connecting to Power BI.

Once you understand the purpose, you can experiment with the script. I’ve provided the script in my GitHub repository for you to try it out. It’s important to have this setup if you plan to connect an existing file to Power BI. You can access the script here.

Create a database

A bit of data cleaning will be performed. As shown here, all spaces have been replaced with underscores, and any ampersands have been changed to “and.” This basic cleaning allows the data to be written as an SQL table into the SQL database.

# Rename the columns to remove spaces

renamed_columns = [

col.replace(" ", "_").replace("&", "And") for col in df_client.columns

]

df_client = df_client.toDF(*renamed_columns)

# Display the data

display(df_client)A new database will be created, and this database should bear anything you choose for it too. We can say “tutorials_database”.

create database if not exists tutorials_databaseUpon clicking, it will show that the database has been successfully created. This database name will be assigned to a variable called “database_name”.

database_name = "david_ezekiel_tutorials"Next, the client data frame will be written as a table. The table will be named “customer” and attached to the database “tutorials_database” . This setup will be executed.

df_client.write.mode("overwrite").saveAsTable(database_name + ".customer")After running this, a quick check of the catalog will confirm the table’s creation. Refreshing the catalog will show that both the database and the table have been successfully created.

To view the created table, the following can be used:

select

*

from

david_ezekiel_tutorials.customerYou can then run your query on the newly created table.

Connect Databricks to Power BI

You might be wondering, “How do I connect Databricks to Power BI?” First, click on the “Catalog” option in your Databricks workspace. After refreshing the page, you should see that your database has been successfully created and the table you have has also been created.

On the top right of your screen, you’ll find a feature called “Use with BI tools”. Click on this drop-down menu, and you’ll see options including “Open with Power BI” and “Open with Tableau”.

For this guide, we’re going to focus on connecting to Power BI, so you’ll want to click on “Open with Power BI”.

Remember, your choice here depends solely on what BI tool you’re connecting to at that time. If you’re using a different tool, simply select the appropriate option from the dropdown.

There are two ways to connect Databricks to Power BI: automatic connection and manual connection. The automatic connection method simplifies the process by using the “Open with Power BI” option in Databricks, which sets up most of the connection details for you. On the other hand, the manual connection requires you to enter the connection details yourself directly in Power BI, giving you more control but requiring more effort. Let’s start by exploring the automatic connection method, which is generally easier for most users.

The connection file will be downloaded and double-clicked to open. Ensure all account credentials are ready, as a prompt for them is likely.

In Power BI, the Azure Active Directory will be used to connect. This will display the database name and the “customer” table. If there were more tables, they would appear here as well. The table will be selected, and the “Load” button will be clicked.

….

Now that the data has been loaded, it can be viewed in Power BI. Currently, it is in direct query mode. If desired, one can switch it to import mode by navigating to “Transform Data”, then “Data Source Settings” and selecting “Switch all tables to import mode”. This action caches the data within Power BI Desktop. Finally, clicking on “Close and Apply” will apply these changes.

Now, the table is visible here, displaying all the data.

Next, another method for achieving this connection will be demonstrated.

Begin by starting a new Power BI report. Click on “Get Data” choose “Databricks” and establish the connection.

To find the server host and path, return to Databricks, open the cluster, and click on “Advanced Options.” Within the “JDBC/ODBC” section, copy the server name and paste it into Power BI as the server name. Repeat this step for the HTTP path. Then, select “Import Connection” and click “OK”. This action will display all the tables that have been created. From there, choose the desired database and the “customer” table.

Users can connect to Power BI either directly or by downloading the connection file from Databricks and running it automatically. Whichever method is chosen, this blog aims to provide a helpful starting point. Connecting data in Power BI unlocks numerous possibilities for analysis and visualization, facilitating the extraction of insights and informed, data-driven decision-making.

Give it a try and see how seamless the process can be. And if you run into any issues or have any questions, feel free to comment below. I’d love to hear about your experiences and any tips you discover.

To find out more amazing things you do with Databricks, you can read them here using this link

Thanks for following along. Happy data exploring!