
The Azure Databricks Lakehouse architecture combines the scalable, low-cost storage of a data lake with the reliability and query performance of a data warehouse in a single platform. Instead of maintaining a lake for raw files and a separate warehouse for reporting, organizations can store, govern, and analyze all of their data in one place. The architecture is built on components such as Delta Lake (including its UniForm format), Unity Catalog, and Databricks SQL.

In this article, I will provide a general overview of the Azure Databricks Lakehouse architecture and introduce you to its various components. Understanding this overview could help you do more with your data—whether it’s unifying storage, enabling real-time analytics, or scaling machine learning workflows. You can also watch the video embedded in the article for a more detailed walkthrough.

Now, let’s break it down so you can see why this architecture is a must-have for modern data-driven businesses.

1. The Foundation: Azure Data Lake Storage

At the core of the Lakehouse architecture is Azure Data Lake Storage. Consider it the central repository where all your raw data lives: logs, text files, images, videos, audio, and more. Because it holds structured, semi-structured, and unstructured data at scale, it can serve as the single landing zone for everything your business collects.

But here’s the catch: raw data alone isn’t enough. Files on their own carry no enforced schema and no transactional guarantees, so you have all the information but no reliable framework for querying it, and extracting meaningful insights is difficult. To unlock the true value of your data, you need structure, reliability, and tools to process it effectively. That’s where the rest of the Lakehouse architecture comes into play.

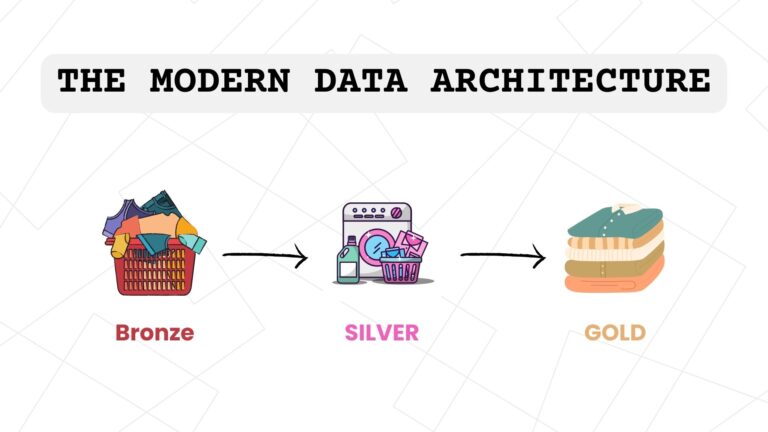

2. Delta Lake and UniForm: Bringing Structure and Reliability

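Working with massive datasets can be challenging, especially when you need consistency and reliability across different tools and platforms. Delta Lake adds structure to the raw files in your lake: it stores data as open Parquet files together with a transaction log, which provides ACID transactions, schema enforcement, and access to earlier versions of a table. The result is data that is consistent, reliable, and ready for fast, scalable processing.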

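The best part? With UniForm (Delta Lake’s Universal Format) enabled, the same tables can be read across various data platforms, whether you’re using Databricks, Azure Synapse, or other query services. UniForm writes Apache Iceberg metadata alongside Delta’s own, so Iceberg-compatible engines can query the data without a separate copy. Keeping one copy avoids duplicating data into multiple formats, and transactional writes keep readers from seeing half-finished updates, giving you a solid foundation for your analytics and machine learning initiatives.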
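To make the idea of a transaction log concrete, here is a deliberately simplified Python model. It is a sketch under stated assumptions, not Delta Lake’s implementation or API: the class `ToyDeltaTable` and its methods are hypothetical, and rows are stored directly in the log for brevity, whereas real Delta commits record which Parquet data files were added or removed. It illustrates three properties described above: schema enforcement, all-or-nothing commits, and reading an earlier version of a table.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToyDeltaTable:
    """Hypothetical model of a Delta-style table: a fixed schema plus an ordered commit log."""

    schema: dict[str, type]  # column name -> expected Python type
    log: list[str] = field(default_factory=list)  # one JSON entry per committed version

    def _validate(self, row: dict) -> None:
        # Schema enforcement: reject rows with missing or extra columns, or wrong types.
        if set(row) != set(self.schema):
            raise ValueError(f"columns {sorted(row)} do not match schema {sorted(self.schema)}")
        for col, typ in self.schema.items():
            if not isinstance(row[col], typ):
                raise TypeError(f"column {col!r} expects {typ.__name__}")

    def commit(self, rows: list[dict]) -> int:
        # Validate the whole batch before writing, so a bad batch leaves the log untouched.
        for row in rows:
            self._validate(row)
        self.log.append(json.dumps({"add": rows}))
        return len(self.log) - 1  # version number of the new commit

    def read(self, version: Optional[int] = None) -> list[dict]:
        # Replay commits up to `version` (latest by default), a miniature "time travel".
        last = len(self.log) - 1 if version is None else version
        rows: list[dict] = []
        for entry in self.log[: last + 1]:
            rows.extend(json.loads(entry)["add"])
        return rows


# Usage: two good commits, then one rejected by schema enforcement.
table = ToyDeltaTable(schema={"id": int, "amount": float})
v0 = table.commit([{"id": 1, "amount": 9.5}])
v1 = table.commit([{"id": 2, "amount": 20.0}])
try:
    table.commit([{"id": 3, "amount": "oops"}])
except TypeError as err:
    print("rejected:", err)

print(len(table.read()), len(table.read(v0)))  # 2 1
```

On Databricks, UniForm itself is switched on per table through table properties (the documentation describes `'delta.universalFormat.enabledFormats' = 'iceberg'`, among others); check the current docs for the exact properties your runtime requires.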

3. Unity Catalog: Centralized Governance and Security

In today’s data-driven world, security and governance are non-negotiable. That’s where Unity Catalog comes in. It acts as a central governance layer for your data and its metadata, keeping data secure, well organized, and accessible only to authorized users.

With Unity Catalog, you can:

1. Set up role-based access controls to manage who can access what.

2. Use audit logs to track data access and usage.

3. Trace data lineage to understand where your data comes from and how it’s being used.

This level of control keeps your data safe and helps you meet regulatory and industry compliance requirements, making it a critical component of the Lakehouse architecture.
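The three capabilities above can be illustrated with a small in-memory model. This is a hypothetical sketch, not Unity Catalog’s API: `ToyCatalog`, the principals, and the table names are invented for illustration. In Unity Catalog itself, privileges are granted with SQL such as ``GRANT SELECT ON TABLE main.sales.orders TO `analysts` ``, and tables are addressed by a three-level `catalog.schema.table` name, which the sketch imitates.

```python
from collections import defaultdict
from datetime import datetime, timezone


class ToyCatalog:
    """Hypothetical governance layer: grants, an audit log, and table-level lineage."""

    def __init__(self) -> None:
        self.grants = defaultdict(set)    # (privilege, table) -> principals holding it
        self.audit_log = []               # one record per access check
        self.upstream = defaultdict(set)  # table -> tables it was derived from

    def grant(self, privilege: str, table: str, principal: str) -> None:
        self.grants[(privilege, table)].add(principal)

    def check(self, user: str, groups: list, privilege: str, table: str) -> bool:
        # Allowed if the user, or any group the user belongs to, holds the privilege.
        allowed = bool(({user} | set(groups)) & self.grants[(privilege, table)])
        # Every check is audited, whether it succeeds or not.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "privilege": privilege,
            "table": table,
            "allowed": allowed,
        })
        return allowed

    def record_lineage(self, target: str, sources: set) -> None:
        self.upstream[target].update(sources)

    def lineage(self, table: str) -> set:
        # Walk upstream edges to collect every table this one ultimately depends on.
        seen, stack = set(), [table]
        while stack:
            for parent in self.upstream[stack.pop()]:
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return seen


# Usage with hypothetical principals and three-level table names.
catalog = ToyCatalog()
catalog.grant("SELECT", "main.sales.orders", "analysts")
ok = catalog.check("ana", ["analysts"], "SELECT", "main.sales.orders")
denied = catalog.check("bob", ["interns"], "SELECT", "main.sales.orders")
catalog.record_lineage("main.sales.daily_revenue", {"main.sales.orders"})
catalog.record_lineage("main.sales.orders", {"main.raw.order_events"})
print(ok, denied)  # True False
print(sorted(catalog.lineage("main.sales.daily_revenue")))
```

Granting to a group rather than to individuals mirrors the common practice of managing access by role, so adding a new analyst only requires adding them to the group.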

4. The Power Tools: Unlocking the Potential of Your Data

Now that we’ve covered the foundation, let’s look into the tools that make the Lakehouse architecture truly shine. These tools sit at the top of the stack and are designed to help you extract maximum value from your data.

- Machine Learning and Artificial Intelligence

If you’re working with machine learning (ML) or artificial intelligence (AI), Azure Databricks offers Mosaic AI, an AI and data science platform for building, training, and deploying models at scale on the same data the rest of the Lakehouse manages. Whether you’re predicting customer behavior, optimizing operations, or uncovering hidden patterns, it helps you turn data into actionable intelligence.

- Delta Live Tables: Simplifying Real-Time ETL

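ETL (extract, transform, load) processes are essential but often time-consuming and complex. Delta Live Tables takes a declarative approach: you define the tables you want and the transformations that produce them, and Delta Live Tables works out the dependencies, orchestrates the pipeline, and enforces data-quality rules (called expectations) for both streaming and batch data. This reduces manual effort and errors and keeps your data ready for analysis.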
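The declarative style can be approximated in plain Python to show what the framework handles for you. In a real pipeline you would use the `dlt` module’s decorators, such as `@dlt.table` and `@dlt.expect_or_drop`; the stand-ins below (`toy_table`, `toy_expect_or_drop`, `run_pipeline`) are hypothetical and only mimic the idea: each table is a function that reads its inputs by name, the runner resolves dependencies on demand, and rows that fail an expectation are dropped and counted.

```python
from __future__ import annotations

from typing import Callable

# Registry of declared tables: name -> {"fn": table function, "expectations": [(rule, predicate)]}
PIPELINE: dict[str, dict] = {}


def toy_table(fn: Callable) -> Callable:
    """Hypothetical stand-in for @dlt.table: register a function as a named table."""
    PIPELINE.setdefault(fn.__name__, {"expectations": []})["fn"] = fn
    return fn


def toy_expect_or_drop(rule: str, predicate: Callable[[dict], bool]) -> Callable:
    """Hypothetical stand-in for @dlt.expect_or_drop: rows failing `predicate` are dropped."""
    def wrap(fn: Callable) -> Callable:
        PIPELINE.setdefault(fn.__name__, {"expectations": []})["expectations"].append((rule, predicate))
        return fn
    return wrap


def run_pipeline(target: str) -> tuple[list[dict], dict[str, int]]:
    """Materialize `target`, building upstream tables on demand and enforcing expectations."""
    cache: dict[str, list[dict]] = {}
    dropped: dict[str, int] = {}

    def read(name: str) -> list[dict]:
        if name not in cache:
            spec = PIPELINE[name]
            rows = spec["fn"](read)  # the table function pulls its own inputs via read()
            for rule, predicate in spec["expectations"]:
                kept = [r for r in rows if predicate(r)]
                dropped[rule] = dropped.get(rule, 0) + len(rows) - len(kept)
                rows = kept
            cache[name] = rows
        return cache[name]

    return read(target), dropped


@toy_table
def raw_orders(read):
    # Stand-in for ingesting files from storage: hard-coded sample rows.
    return [{"id": 1, "amount": 40.0}, {"id": 2, "amount": -5.0}, {"id": 3, "amount": 12.5}]


@toy_table
@toy_expect_or_drop("positive_amount", lambda r: r["amount"] > 0)
def clean_orders(read):
    # Declares only what the table is; the runner decides when raw_orders is built.
    return read("raw_orders")


clean, dropped = run_pipeline("clean_orders")
print([r["id"] for r in clean], dropped)  # [1, 3] {'positive_amount': 1}
```

The point of the declarative design is that `clean_orders` never says when or how `raw_orders` is built; the runner works that out, which is the kind of bookkeeping Delta Live Tables takes off your hands.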

- Workflows: Automating Your Data Pipelines

Imagine having a personal assistant for your data operations. That’s roughly what Workflows provides. A workflow (job) is a set of tasks, such as notebooks, Delta Live Tables pipelines, or SQL queries, linked by dependencies; Workflows runs each task once its upstream tasks finish, on a schedule or trigger, and can retry tasks that fail. That lets you automate end-to-end pipelines and focus on insights rather than infrastructure.
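Ordering tasks by their dependencies is essentially a topological sort. The sketch below uses Python’s standard-library `graphlib.TopologicalSorter` on a hypothetical four-task job, groups the tasks into stages that could run in parallel, and adds a simple retry helper. It models the scheduling idea only; it does not call the Databricks Jobs API, and the task names are invented.

```python
from __future__ import annotations

from graphlib import TopologicalSorter
from typing import Callable, TypeVar

T = TypeVar("T")

# Hypothetical job: each task maps to the set of tasks it depends on.
job = {
    "ingest_raw": set(),
    "clean_orders": {"ingest_raw"},
    "clean_customers": {"ingest_raw"},
    "build_report": {"clean_orders", "clean_customers"},
}


def plan_stages(dag: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into stages; tasks within a stage do not depend on each other."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a loop
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose upstream tasks are all done
        stages.append(ready)
        sorter.done(*ready)  # assume every task in the stage succeeded
    return stages


def run_with_retries(action: Callable[[], T], max_retries: int = 2) -> T:
    """Run `action`, retrying up to `max_retries` extra times if it raises."""
    if max_retries < 0:
        raise ValueError("max_retries must be >= 0")
    for attempt in range(max_retries + 1):
        try:
            return action()
        except Exception:
            if attempt == max_retries:
                raise  # out of retries: surface the last error


attempts = []


def flaky_ingest() -> str:
    # Simulated task that fails once with a transient error, then succeeds.
    attempts.append(1)
    if len(attempts) < 2:
        raise RuntimeError("transient failure")
    return "ingested"


stages = plan_stages(job)
result = run_with_retries(flaky_ingest)
print(stages)  # [['ingest_raw'], ['clean_customers', 'clean_orders'], ['build_report']]
print(result, len(attempts))  # ingested 2
```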

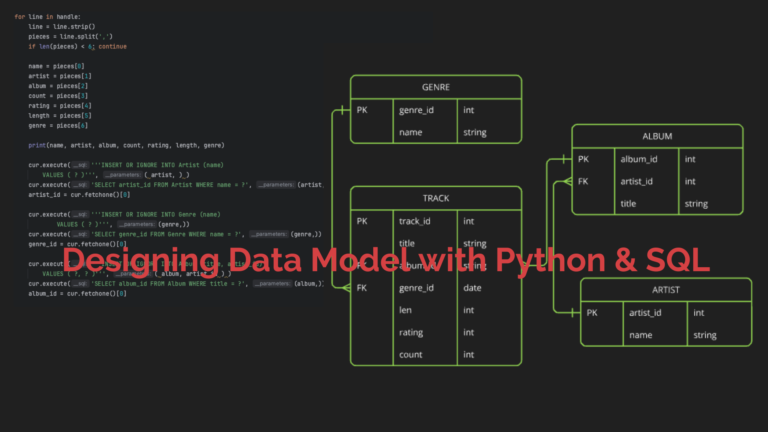

- Databricks SQL: High-Performance Analytics

Need to run SQL queries on massive datasets? Databricks SQL is your go-to solution. It is the data warehouse layer of the Lakehouse: queries run on SQL warehouses, compute resources dedicated to SQL workloads, directly against the tables in your lake, which makes it well suited to reporting, dashboards, and advanced analytics.
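For a feel of the dashboard-style queries this layer serves, here is a small aggregate query. To keep the example runnable anywhere, it uses Python’s built-in `sqlite3` as a local stand-in for a SQL warehouse; the `orders` table and its columns are hypothetical. Standard SQL like this `GROUP BY` query typically carries over, though dialect details can differ between engines.

```python
import sqlite3

# sqlite3 is only a local stand-in so the example runs anywhere; table and columns are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_date TEXT, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("2024-01-01", "west", 100.0),
        ("2024-01-01", "east", 50.0),
        ("2024-01-02", "west", 75.0),
    ],
)

# A typical dashboard query: revenue and order count per day.
DAILY_REVENUE = """
SELECT order_date, SUM(amount) AS revenue, COUNT(*) AS orders
FROM orders
GROUP BY order_date
ORDER BY order_date
"""
rows = conn.execute(DAILY_REVENUE).fetchall()
for order_date, revenue, n_orders in rows:
    print(order_date, revenue, n_orders)
conn.close()
```

To send queries to an actual SQL warehouse from Python, the `databricks-sql-connector` package provides `databricks.sql.connect(server_hostname=..., http_path=..., access_token=...)`, with the values taken from the warehouse’s connection details.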

Why the Lakehouse Architecture Belongs in Your Toolbox

So, why is the Azure Databricks Lakehouse architecture such a big deal? It’s simple: it brings together the best of both worlds, data lakes and data warehouses, in one unified platform. With Azure Data Lake Storage as the foundation, Delta Lake ensuring consistency, Unity Catalog keeping things secure, and a suite of powerful tools for processing and analytics, it covers the path from raw data to insight in one place.

The Azure Databricks Lakehouse architecture isn’t just another data solution. Because storage, processing, governance, and analytics live in a single ecosystem, teams spend less effort stitching separate systems together and more effort getting value from their data.

Whether you’re building machine learning models, automating pipelines, or just trying to make sense of your data, the Lakehouse architecture has something for everyone. And the best part? It’s designed to scale with your business, so you’re always ready for what’s next.