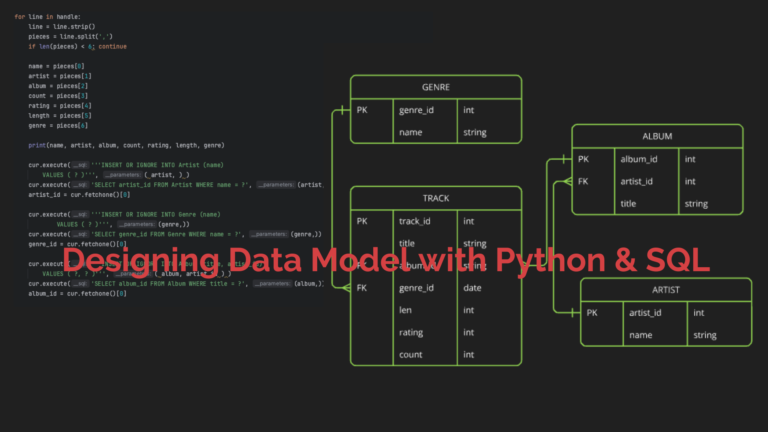

The Medallion Architecture is a multilayered framework that ensures data quality and manageability as data flows from raw sources to analytics-ready datasets. In this article, I will explain the Medallion Architecture and demonstrate how to design a data pipeline using this framework. For a more detailed walkthrough, you can also watch the video embedded in the article.

Evolution of Data Management

In the early days of computing, data storage and retrieval were basic, relying on physical mediums like punch cards and magnetic tapes. Accessing and managing data was slow and labor-intensive, with limited scalability and efficiency. The 1970s brought a transformative shift with the introduction of relational databases, which organized data into structured tables and enabled querying through SQL (Structured Query Language). This innovation revolutionized data management, offering businesses a more efficient and scalable way to handle information. Data warehouses were introduced in the 1980s and 1990s, centralizing structured data for advanced business intelligence and analytics, further solidifying the foundation for modern data systems.

The 2000s brought in the era of Big Data, driven by the internet and social media, leading to an explosion in data volume, velocity, and variety. Tools like Hadoop addressed the need to process massive, unstructured datasets but introduced challenges in performance and schema management. The 2010s saw the rise of cloud computing and Apache Spark, which improved scalability and real-time processing capabilities. Data lakes became popular for their flexibility but often struggled with governance and data quality. Today, businesses face the challenge of managing complex data ecosystems, necessitating structured approaches like the Medallion Architecture. This framework systematically ingests, cleans, and enriches data, ensuring reliability, scalability, and governance, making it essential for modern data management.

What is the Medallion Architecture?

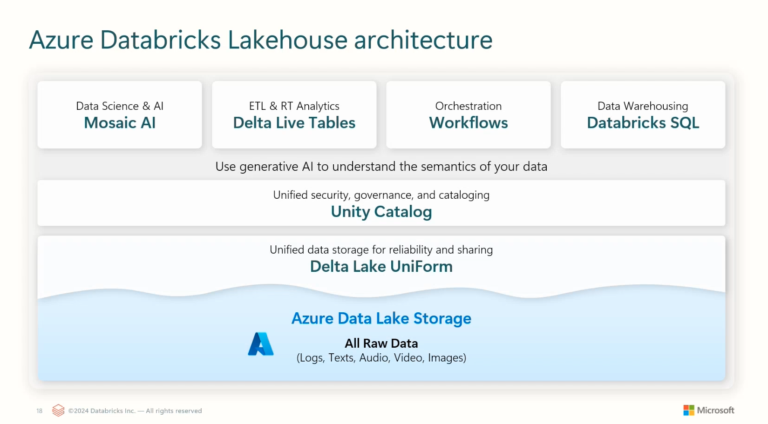

The Medallion Architecture, a term coined by Databricks, is a data design pattern used to logically organize data in a lakehouse. The goal is to improve the structure and quality of data incrementally as it flows through each layer of the architecture, from Bronze to Silver to Gold. Each layer handles a specific stage in the data’s journey.

Just as precious metals pass through several stages of refinement before they become a “Medallion”, raw data passes through successive stages of refinement to become fully processed, ready-to-use data. The name symbolizes the purification and curation of a raw material into something useful and valuable.

The Three Levels of Medallion Architecture

The Medallion Architecture organizes data into three progressive layers:

- The Bronze Layer, where raw data from sources like cloud storage or IoT devices is ingested in its original form, retaining all historical data for reprocessing and auditing.

- The Silver Layer, where raw data is cleaned, validated, and transformed through processes like deduplication, schema enforcement, and data standardization to improve quality.

- The Gold Layer, where data is fully refined, aggregated, and optimized for business use, modeled for specific domains like sales or finance, and designed to support reporting, dashboards, and machine learning models.

The Bronze Layer

The Bronze layer is where data from various sources—such as cloud storage, Kafka, or Salesforce—arrives in its raw form. Think of it as a laundry basket where you load all your used clothes in their raw state. The Bronze layer contains raw, unvalidated data with the following characteristics:

- It maintains the raw state of the data source in its original format.

- It is appended incrementally and grows over time.

- It is intended for consumption by workloads that enrich data for Silver tables, not for access by analysts and data scientists.

- It enables reprocessing and auditing by retaining all historical data.

- It can be any combination of streaming and batch transactions from sources like cloud object storage (e.g., S3, GCS, ADLS), message buses (e.g., Kafka, Kinesis), and federated systems.

This layer is primarily used by data engineers, data operations, and compliance and audit teams.
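The Bronze characteristics above can be sketched in plain Python. This is a minimal illustration, not a production ingestion job: the function name `ingest_to_bronze`, the in-memory list standing in for a Bronze table, and the metadata field names are all assumptions made for the example.

```python
from datetime import datetime, timezone

def ingest_to_bronze(bronze_table, raw_payloads, source_name):
    """Append raw payloads to the Bronze table without cleaning or validating them."""
    ingested_at = datetime.now(timezone.utc).isoformat()
    for payload in raw_payloads:
        bronze_table.append({
            "raw": payload,              # stored exactly as received
            "source": source_name,       # hypothetical lineage field for auditing
            "ingested_at": ingested_at,  # hypothetical load timestamp for reprocessing
        })
    return bronze_table

# An in-memory list stands in for an append-only Bronze table.
bronze = []
ingest_to_bronze(bronze, ['{"id": 1, "amount": "19.99"}'], "kafka")
# Duplicates and malformed payloads are kept on purpose; Silver deals with them.
ingest_to_bronze(bronze, ['{"id": 1, "amount": "19.99"}', "not json"], "s3")
```

Nothing is rejected or rewritten at this stage, which is what makes later reprocessing and auditing possible.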

The Silver Layer

Once data is ingested, it moves into the Silver layer, where data quality issues are addressed. Data is read from one or more Bronze or Silver tables and written into Silver tables. Key processes in the Silver layer include:

- Deduplication of records to avoid multiple entries of the same data.

- Schema Enforcement and Type Casting to ensure each field is consistent.

- Joining & Merging datasets, such as combining customer details with transaction logs for a comprehensive view.

- Data Standardization—handling null values or quarantining erroneous records.

This layer is used by data engineers, data analysts, and data scientists for more refined datasets that retain detailed information necessary for in-depth analysis.
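A rough sketch of the Silver processes listed above (type casting, deduplication, and quarantining) might look like the following. The column names, the `to_silver` function, and the choice of `order_id` as the deduplication key are assumptions for illustration only.

```python
def to_silver(bronze_rows):
    """Return (silver_rows, quarantined_rows) built from raw Bronze rows."""
    silver, quarantined, seen_ids = [], [], set()
    for row in bronze_rows:
        try:
            # Schema enforcement and type casting: each field gets one consistent type.
            clean = {
                "order_id": int(row["order_id"]),
                "customer_id": int(row["customer_id"]),
                "amount": float(row["amount"]),
            }
        except (KeyError, TypeError, ValueError) as err:
            # Erroneous records are set aside rather than silently dropped.
            quarantined.append({"row": row, "reason": repr(err)})
            continue
        # Deduplication on the (assumed) business key.
        if clean["order_id"] in seen_ids:
            continue
        seen_ids.add(clean["order_id"])
        silver.append(clean)
    return silver, quarantined

bronze_rows = [
    {"order_id": "1", "customer_id": "10", "amount": "19.99"},
    {"order_id": "1", "customer_id": "10", "amount": "19.99"},  # duplicate
    {"order_id": "2", "customer_id": "11", "amount": None},     # null amount
    {"order_id": "3", "customer_id": "12", "amount": "5"},
]
silver_rows, quarantined_rows = to_silver(bronze_rows)
```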

The Gold Layer

The final stop is the Gold layer, where data is fully refined and prepared for analytics, dashboards, or ML models. The Gold layer models data for reporting and analytics using a dimensional model by establishing relationships and defining measures. Analysts with access to data in the Gold layer should be able to find domain-specific data and answer questions. Additional tasks in this layer include:

- Aggregations for key metrics, such as total sales or average customer spending.

- Performance Optimization with partitioning and indexing to ensure quick query responses.

This layer is used by business analysts, BI developers, data scientists, ML engineers, executives, decision-makers, and operational teams.
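To make "establishing relationships and defining measures" concrete, here is a small sketch of a Gold-style aggregation over a fact table and one dimension. The table contents, the key name `region_key`, and the measure names are invented for the example.

```python
from collections import defaultdict

# Hypothetical dimension and fact tables; the fact rows reference the dimension by key.
dim_region = {1: "EMEA", 2: "APAC"}
fact_sales = [
    {"region_key": 1, "amount": 100.0},
    {"region_key": 1, "amount": 50.0},
    {"region_key": 2, "amount": 30.0},
]

def sales_by_region(facts, regions):
    """Aggregate the fact table into per-region measures."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for fact in facts:
        name = regions[fact["region_key"]]  # resolve the relationship to the dimension
        totals[name] += fact["amount"]
        counts[name] += 1
    return {
        name: {"total_sales": totals[name], "avg_sale": totals[name] / counts[name]}
        for name in totals
    }

gold_sales_by_region = sales_by_region(fact_sales, dim_region)
```

Precomputing measures like these is one reason Gold tables can answer business questions without analysts touching the more detailed Silver data.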

Example of Medallion Architecture

This example, taken from Microsoft’s documentation on the medallion architecture, shows Bronze, Silver, and Gold layers for use by a business operations team. Each layer is stored in a different schema of the ops catalog. See the Microsoft documentation for the full example.

- Bronze layer (ops.bronze): Ingests raw data from cloud storage, Kafka, and Salesforce. No data cleanup or validation is performed here.

- Silver layer (ops.silver): Data cleanup and validation are performed in this layer.

  - Data about customers and transactions is cleaned by dropping nulls and quarantining invalid records. These datasets are joined into a new dataset called customer_transactions. Data scientists can use this dataset for predictive analytics.

  - Similarly, the accounts and opportunities datasets from Salesforce are joined to create account_opportunities, which is enhanced with account information.

  - The leads_raw data is cleaned in a dataset called leads_cleaned.

- Gold layer (ops.gold): This layer is designed for business users. It contains fewer datasets than the Bronze and Silver layers.

  - customer_spending: Average and total spend for each customer.

  - account_performance: Daily performance for each account.

  - sales_pipeline_summary: Information about the end-to-end sales pipeline.

  - business_summary: Highly aggregated information for the executive staff.
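One path through this example, from customers and transactions to customer_transactions and then customer_spending, can be sketched as follows. The dataset names come from the example above; the column names, sample rows, and the inner-join behavior are assumptions, and quarantining is omitted to keep the sketch short.

```python
customers = [
    {"customer_id": 1, "name": "Ada"},
    {"customer_id": 2, "name": None},   # dropped: null name
]
transactions = [
    {"customer_id": 1, "amount": 20.0},
    {"customer_id": 1, "amount": 40.0},
    {"customer_id": 2, "amount": 15.0},  # dropped by the join: customer 2 was removed
    {"customer_id": 3, "amount": None},  # dropped: null amount
]

def drop_nulls(rows):
    """Keep only rows in which every field has a value."""
    return [r for r in rows if all(v is not None for v in r.values())]

def build_customer_transactions(customers, transactions):
    """Silver: join cleaned customers with cleaned transactions (inner join assumed)."""
    by_id = {c["customer_id"]: c for c in drop_nulls(customers)}
    return [
        {**by_id[t["customer_id"]], "amount": t["amount"]}
        for t in drop_nulls(transactions)
        if t["customer_id"] in by_id
    ]

def build_customer_spending(customer_transactions):
    """Gold: average and total spend for each customer."""
    spending = {}
    for row in customer_transactions:
        entry = spending.setdefault(row["customer_id"], {"total": 0.0, "count": 0})
        entry["total"] += row["amount"]
        entry["count"] += 1
    return {
        cid: {"total_spend": e["total"], "average_spend": e["total"] / e["count"]}
        for cid, e in spending.items()
    }

customer_transactions = build_customer_transactions(customers, transactions)
customer_spending = build_customer_spending(customer_transactions)
```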

Best Practices for Implementing the Medallion Architecture

Implementing the Medallion Architecture effectively requires adherence to best practices. Here are some recommendations from Microsoft:

1. Layer-by-Layer Security

Establishing clear boundaries for each Medallion layer is foundational to ensuring data integrity and security when setting up your lakehouse. The Medallion Architecture—comprising Bronze (raw data), Silver (cleaned and enriched data), and Gold (curated, business-ready data)—requires distinct zones to manage access and permissions effectively.

- Separate the layers into distinct schemas or catalogs so that strict access controls can be enforced. For example, data engineers have write access to the Bronze and Silver layers for raw data ingestion and transformation, while only authorized analytics professionals or data stewards can publish or update Gold layer data. This keeps Gold data trusted and minimizes errors and unauthorized changes.

- Use centralized identity management tools such as Microsoft Entra ID (formerly Azure AD) or AWS IAM to define granular roles and permissions, restricting access to sensitive datasets and schema alterations to specific users or groups. This enhances security and simplifies regulatory compliance.

- For highly sensitive data, apply techniques such as data masking and encryption at rest and in transit. For example, PII held in the Bronze layer can be masked as it moves to Silver, so that only authorized users can access the original values.
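As a rough illustration of layer-by-layer permissions and PII masking, the sketch below models roles as a plain dictionary. The role names, the permission matrix, and the salted-hash masking are assumptions chosen for the example. A real deployment would rely on the platform's own access controls and a managed secret rather than a hard-coded salt.

```python
import hashlib

# Hypothetical role-to-layer permission matrix.
LAYER_PERMISSIONS = {
    "data_engineer": {"bronze": {"read", "write"}, "silver": {"read", "write"}, "gold": {"read"}},
    "data_steward": {"silver": {"read"}, "gold": {"read", "write"}},
    "business_analyst": {"gold": {"read"}},
}

def is_allowed(role, layer, action):
    """Deny by default: unknown roles, layers, or actions are rejected."""
    return action in LAYER_PERMISSIONS.get(role, {}).get(layer, set())

def mask_pii(value, salt="demo-salt"):
    """Replace a PII value with a truncated salted hash (illustrative only)."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:12]

bronze_row = {"customer_id": 1, "email": "ada@example.com"}
# Mask the sensitive column while promoting the row to Silver.
silver_row = {**bronze_row, "email": mask_pii(bronze_row["email"])}
```

Because the same input always yields the same token, masked columns can still be used as join keys in Silver without exposing the original value.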

2. Governance and Compliance

As your data ecosystem grows, so does the complexity of managing compliance and governance. A well-defined governance framework is essential to maintain trust in your data and meet regulatory standards.

- Data classification and tagging enable dynamic security policies. Labeling datasets by sensitivity (e.g., PII, financial data, or intellectual property) allows measures such as row-level security (RLS), which restricts access to specific rows, and column-level encryption for sensitive fields such as Social Security numbers or credit card details.

- Comprehensive audit logs track dataset changes and answer questions like “Who accessed this data?”, “When was it modified?”, and “What changes were made?”. This transparency is crucial for compliance with regulations such as GDPR, HIPAA, or CCPA. Tools like Microsoft Purview (formerly Azure Purview) or AWS Lake Formation can help automate the tracking of data lineage and access patterns.

- Data retention policies ensure data is stored only as long as necessary, reducing storage costs and compliance risks. For example, raw logs in the Bronze layer might be retained for 30 days, while curated Gold data could be stored indefinitely for business analytics.
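The interplay of tags, column-level protection, and retention can be sketched in a few lines. The tag names, the `COLUMN_TAGS` and `RETENTION` mappings, and the redaction marker are hypothetical. The 30-day Bronze window mirrors the example in the text.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical classification tags per column; untagged columns are not sensitive.
COLUMN_TAGS = {"ssn": "PII", "card_number": "PII", "amount": "financial"}
# Retention per layer; None means "keep indefinitely".
RETENTION = {"bronze": timedelta(days=30), "gold": None}

def redact_row(row, allowed_tags):
    """Hide every tagged column whose tag the caller is not cleared for."""
    redacted = {}
    for column, value in row.items():
        tag = COLUMN_TAGS.get(column)
        redacted[column] = value if tag is None or tag in allowed_tags else "***"
    return redacted

def is_expired(layer, created_at, now):
    """True when a record has outlived its layer's retention period."""
    keep_for = RETENTION[layer]
    return keep_for is not None and now - created_at > keep_for

now = datetime(2024, 3, 1, tzinfo=timezone.utc)
row = {"ssn": "000-00-0000", "amount": 10, "region": "EU"}
analyst_view = redact_row(row, allowed_tags={"financial"})
old_log_expired = is_expired("bronze", now - timedelta(days=31), now)
old_gold_expired = is_expired("gold", now - timedelta(days=3650), now)
```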

3. Sustained Data Quality

High-quality data is the backbone of any successful data initiative. Without it, downstream analytics, machine learning models, and business decisions are compromised.

- Automated validation rules embedded directly in data pipelines ensure that only clean, accurate data progresses through the Medallion layers. These rules check for schema conformance, null values, duplicate records, and unexpected patterns (e.g., negative sales figures). Tools like Delta Live Tables on Databricks or Great Expectations formalize these checks.

- Records that fail validation should be quarantined in a separate error table for investigation rather than discarded. This lets data engineers resolve issues without disrupting the pipeline and, over time, uncover systemic problems such as upstream data source issues or gaps in data collection.

- Regular data profiling (calculating metrics like completeness, uniqueness, and distribution) helps identify quality issues early. A sudden drop in completeness, for example, can signal a problem with an upstream API or ETL process.
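The following sketch shows the general shape of named validation rules, a quarantine table, and simple profiling metrics. It is not the API of Delta Live Tables or Great Expectations. The rule names, the `validate` and `profile` functions, and the sample rows are made up for illustration.

```python
# Each rule is a named predicate that returns True when the row is acceptable.
RULES = {
    "amount_present": lambda r: r.get("amount") is not None,
    "amount_non_negative": lambda r: r.get("amount") is None or r["amount"] >= 0,
}

def validate(rows):
    """Split rows into those that pass every rule and those to quarantine."""
    passed, quarantined = [], []
    for row in rows:
        failed = [name for name, rule in RULES.items() if not rule(row)]
        if failed:
            # Keep the row and the reason so engineers can investigate later.
            quarantined.append({"row": row, "failed_rules": failed})
        else:
            passed.append(row)
    return passed, quarantined

def profile(rows, column):
    """Completeness = share of non-null values; uniqueness = distinct / non-null."""
    values = [r.get(column) for r in rows]
    non_null = [v for v in values if v is not None]
    return {
        "completeness": len(non_null) / len(values) if values else 0.0,
        "uniqueness": len(set(non_null)) / len(non_null) if non_null else 0.0,
    }

rows = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": -5.0},  # negative sales figure
    {"order_id": 3, "amount": None},  # missing value
    {"order_id": 4, "amount": 10.0},
]
passed, quarantined = validate(rows)
amount_profile = profile(rows, "amount")
```

Tracking `amount_profile` from run to run is one simple way to notice the sudden completeness drops mentioned above.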

4. Performance Optimization

At scale, performance bottlenecks can hinder productivity and increase costs. Optimizing your lakehouse architecture ensures that it remains efficient and responsive as data volumes grow.

- Partitioning tables by low-cardinality fields that are frequently used in filters (e.g., date or region) enables query engines like Spark or Presto to skip irrelevant partitions, significantly speeding up queries. High-cardinality fields such as customer ID tend to produce many small partitions, so they are usually better served by indexing or clustering on those columns, which further enhances performance for frequently queried columns.

- Autoscaling compute clusters ensures cost efficiency by dynamically adjusting resources based on demand, such as scaling up during large batch ingestion jobs and scaling down during off-peak periods, balancing performance and cost.

- For frequently accessed datasets, data caching or materialized views can effectively reduce query latency, especially for Gold layer data that is often queried by business analysts and reporting tools.
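Partition pruning can be illustrated without a query engine. In this sketch a dictionary keyed by the partition value stands in for a partitioned table. The function names and the `sale_date` column are assumptions for the example.

```python
from collections import defaultdict

def write_partitioned(rows, partition_col):
    """Group rows by partition value, as a partitioned table layout would."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row[partition_col]].append(row)
    return dict(partitions)

def query_total(partitions, partition_value):
    """Sum amounts for one partition value; other partitions are never read."""
    scanned = partitions.get(partition_value, [])
    return sum(r["amount"] for r in scanned), len(scanned)

rows = [
    {"sale_date": "2024-01-01", "amount": 10.0},
    {"sale_date": "2024-01-01", "amount": 20.0},
    {"sale_date": "2024-01-02", "amount": 5.0},
    {"sale_date": "2024-01-02", "amount": 7.0},
]
sales_by_date = write_partitioned(rows, "sale_date")
total, rows_scanned = query_total(sales_by_date, "2024-01-01")
```

Here the query touches two of the four rows. On a real table the same idea lets the engine skip whole directories of files.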

5. Monitoring and Observability

A robust monitoring strategy is essential for maintaining the health and reliability of your data pipelines.

- Pipeline health dashboards should monitor key metrics like load times, error rates, throughput, and resource utilization (CPU, memory, disk I/O). They provide real-time visibility into pipeline performance and help identify bottlenecks or failures.

- Integrating alerting channels such as Slack, PagerDuty, or email notifications ensures the team is promptly informed of pipeline failures, data freshness issues, or resource constraints, enabling immediate investigation and resolution of critical issues like failed data ingestion jobs.

- Regular log analysis helps uncover patterns or anomalies that indicate deeper problems, such as upstream data source issues or unauthorized access attempts. Machine learning-based anomaly detection tools can automate this process by flagging unusual activity for further investigation.
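A health check of this kind can be sketched as a function that turns run metrics into alert messages. The metric names, the `check_pipeline` function, and the thresholds (1% error rate, one hour of staleness) are illustrative assumptions. Delivering the messages to Slack, PagerDuty, or email is left out.

```python
from datetime import datetime, timedelta, timezone

def check_pipeline(run, now, max_error_rate=0.01, max_staleness=timedelta(hours=1)):
    """Return a list of alert messages for one pipeline run (empty when healthy)."""
    alerts = []
    error_rate = run["rows_failed"] / run["rows_read"] if run["rows_read"] else 0.0
    if error_rate > max_error_rate:
        alerts.append(f"error rate {error_rate:.1%} exceeds {max_error_rate:.1%}")
    if now - run["last_success"] > max_staleness:
        alerts.append("data freshness: last successful load is too old")
    return alerts

now = datetime(2024, 3, 1, 12, 0, tzinfo=timezone.utc)
unhealthy_run = {
    "rows_read": 1000,
    "rows_failed": 50,
    "last_success": now - timedelta(hours=2),
}
healthy_run = {
    "rows_read": 1000,
    "rows_failed": 0,
    "last_success": now - timedelta(minutes=5),
}
alerts = check_pipeline(unhealthy_run, now)
```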

6. Cost Management

Cost efficiency is a critical consideration for any data strategy, especially in cloud environments where expenses can quickly spiral out of control.

- Right-sized compute clusters should be selected to match job complexity and avoid overpayment: small clusters for development and testing, larger ones reserved for production workloads.

- Auto-termination and scheduling can significantly reduce costs by shutting down idle clusters during off-hours, such as configuring development clusters to terminate overnight or on weekends when unused.

- For non-critical tasks like development, testing, or batch processing, spot instances (AWS) or preemptible VMs (Google Cloud) offer cost-effective options, though they can be interrupted and are unsuitable for production.

- Storage optimization helps manage costs efficiently. Options include archiving or deleting unused data, compressing files, and moving infrequently accessed data to cheaper tiers like Amazon S3 Glacier or the Azure Blob Storage cool tier.
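The auto-termination idea reduces to a simple rule: stop any non-production cluster that has been idle longer than a limit. The sketch below assumes a hypothetical cluster record with `name`, `env`, and `last_activity` fields and a 30-minute limit. Cloud platforms typically expose this as a cluster setting rather than custom code.

```python
from datetime import datetime, timedelta, timezone

def clusters_to_terminate(clusters, now, idle_limit=timedelta(minutes=30)):
    """Names of non-production clusters idle for longer than idle_limit."""
    return [
        c["name"]
        for c in clusters
        if c["env"] != "prod" and now - c["last_activity"] > idle_limit
    ]

now = datetime(2024, 3, 1, 22, 0, tzinfo=timezone.utc)
clusters = [
    {"name": "dev-etl", "env": "dev", "last_activity": now - timedelta(hours=3)},
    {"name": "dev-notebooks", "env": "dev", "last_activity": now - timedelta(minutes=5)},
    {"name": "prod-ingest", "env": "prod", "last_activity": now - timedelta(hours=3)},
]
idle = clusters_to_terminate(clusters, now)
```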

By implementing these best practices, you can build a secure, efficient, and cost-effective lakehouse architecture that scales with your organization’s needs while maintaining high standards of data quality and governance.

Conclusion

The Medallion Architecture offers a structured, scalable, and efficient framework for transforming raw data into actionable business insights. By progressively refining data through its Bronze, Silver, and Gold layers, organizations can ensure data quality, improve governance, and enable faster, more informed decision-making.

Whether you’re handling massive datasets, integrating diverse data sources, or preparing data for advanced analytics, the Medallion Architecture provides a clear pathway to success. As data continues to grow in volume and complexity, adopting this architecture can help your organization stay competitive, agile, and data-driven.